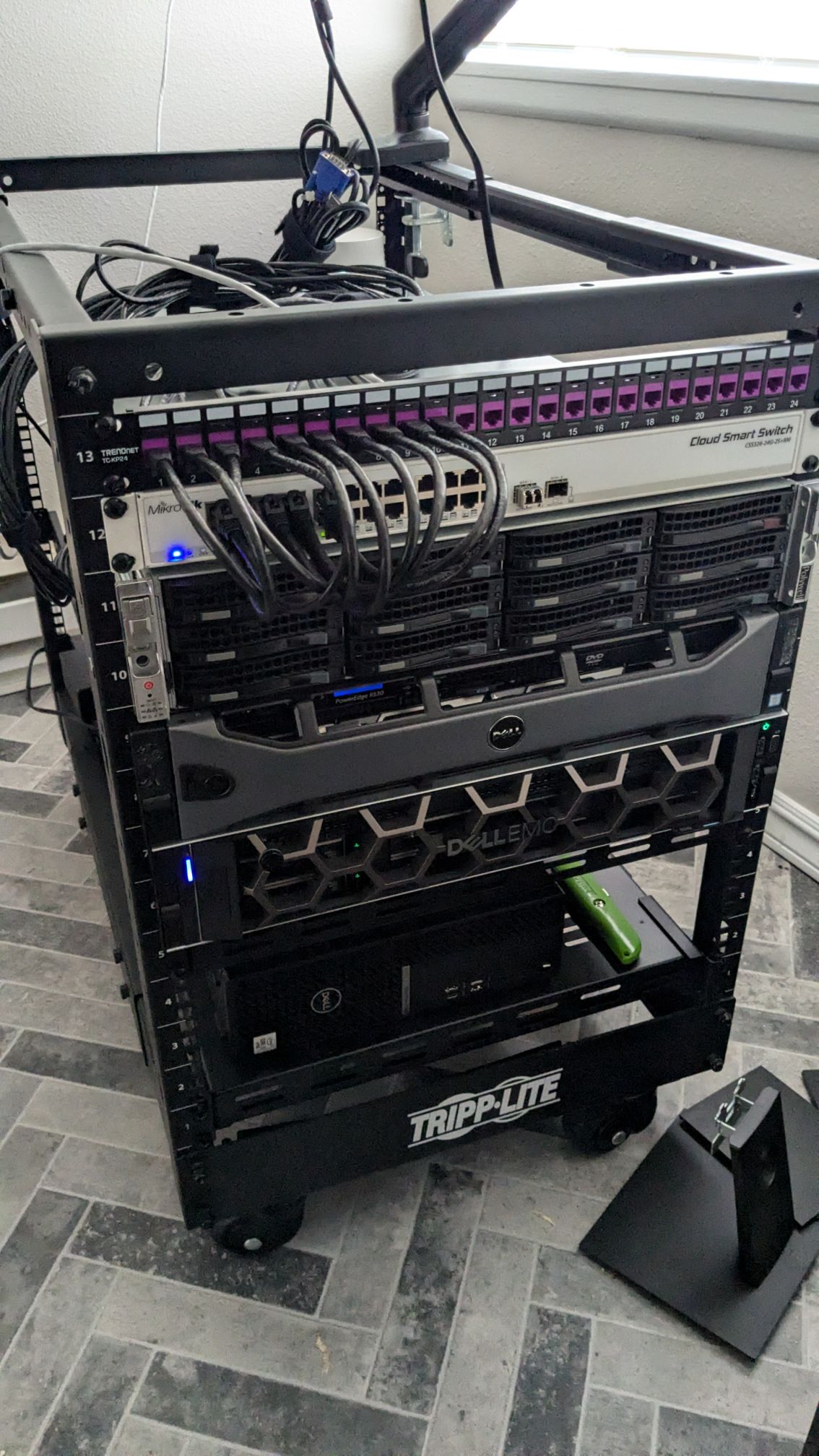

I’ve recently added some new hardware to the homelab server rack, and I finally decided to clean up the rat’s nest that was my cabling.

Over the last few months I was able to snag an R540 and an R530, which finally let me replace my old, power-hungry R610. The new PowerEdges came with H330 and H330 Mini(?) PERC cards, both of which can be switched into HBA mode for direct disk access. I opted for HBA mode because all three servers are now part of a Proxmox cluster and use ZFS pools, which work best with direct access to the disks. Even though the R610 is sitting in my closet now, I added it to the cluster in case one of the new servers goes down. I’m not particularly worried about maintaining quorum, but I may eventually add another device to have three proper nodes that can sustain a failure. In the configuration pictured, if one server goes down, quorum is lost and the remaining node is forced into read-only mode.
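The quorum behavior above comes down to majority voting: by default, Proxmox’s Corosync-based cluster gives each node one vote and needs a strict majority to stay quorate. Here’s a small sketch of that arithmetic, assuming one vote per node and no QDevice or custom vote weights, showing why two nodes can’t survive a failure while three can.

```python
def quorum_threshold(node_votes: int) -> int:
    """Votes needed for a strict majority (assumes one vote per node)."""
    return node_votes // 2 + 1


def tolerated_failures(node_votes: int) -> int:
    """How many single-vote nodes can go down while a majority remains."""
    return node_votes - quorum_threshold(node_votes)


if __name__ == "__main__":
    for nodes in (2, 3, 4, 5):
        print(
            f"{nodes} nodes: need {quorum_threshold(nodes)} votes, "
            f"survives {tolerated_failures(nodes)} failure(s)"
        )
```

Going from three to four nodes raises the threshold without tolerating any extra failures, which is why odd node counts are the usual recommendation.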

Currently I’m using the R540 as the primary node in the cluster. It’s all SSD storage, with about 4TB of usable space, which is a massive jump from the 2012-era rusty SAS drives in the R610. Overall, the hardware in the R540 and R530 isn’t anything special, but I could eventually add a low-profile GPU to round out the package, plus more disks, of course. If you’re interested in the specs: the R540 has a single-socket Intel Xeon Silver 4110, and the R530 has a dual-socket Intel Xeon E5-2623 v3 setup. Each has 32GB of DDR4. The R540 is entirely SATA SSD storage, while the R530 has a few TBs’ worth of spinning disks.

Another addition (and decommissioning) involved my firewall. I replaced the PC Engines APU3D4 with a spare newer-generation OptiPlex and some random NICs I found in the back of our storage room. With the upgrade to full symmetric fiber at my apartment, there wasn’t much reason to keep the APU3D4 around. It has great power consumption and some really neat features, like integrated SIM support for a failover circuit, but I wasn’t using them, and its CPU was too anemic to handle 1GbE through the WAN (although performance tests did show 1GbE on the LAN). I did a lot of tuning before concluding the juice wasn’t worth the squeeze. Given that my entire rack pulls 3-5A at any given time, I don’t think the APU’s super-low power draw was saving much, and the tuning probably wasn’t a good investment of my time.

As for the OptiPlex, there’s not much to say. It’s entirely overkill for a dedicated firewall, but it runs everything I could want and more. It handles the VLANs, runs IPS on the WAN, and runs Zenarmor (not that great as a free user, but it does provide a good amount of insight into LAN traffic). Eventually I’d like to MITM my LAN traffic to decrypt and monitor HTTPS, but it’s a bit of overhead (read: I’m lazy), and I’d need to install my own CA certificate on the local devices.
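To put the rack’s 3-5A draw in perspective, here’s a rough conversion to watts and monthly energy. The post only gives current, so the 120 V supply is an assumption (typical for a US apartment), and the 20 W firewall difference at the end is a purely hypothetical number for illustration, not a measurement of the APU3D4 or the OptiPlex.

```python
ASSUMED_VOLTAGE = 120.0    # assumption: standard US outlet; only amps are known
HOURS_PER_MONTH = 24 * 30  # 30-day month approximation


def watts(amps: float, volts: float = ASSUMED_VOLTAGE) -> float:
    """Approximate power as V * I. Strictly this is apparent power (VA);
    real power is lower if the power factor is below 1."""
    return amps * volts


def monthly_kwh(power_watts: float) -> float:
    """Energy used over a 30-day month at a constant draw, in kWh."""
    return power_watts * HOURS_PER_MONTH / 1000


if __name__ == "__main__":
    for amps in (3.0, 5.0):
        p = watts(amps)
        print(f"{amps:.0f} A -> {p:.0f} W, ~{monthly_kwh(p):.0f} kWh/month")

    # Hypothetical firewall power difference, for illustration only.
    hypothetical_delta_w = 20.0
    share = hypothetical_delta_w / watts(3.0)
    print(f"{hypothetical_delta_w:.0f} W is {share:.1%} of the low-end rack draw")
```

Even at the low end of the range, a small-box firewall’s savings end up as a single-digit percentage of what the rack draws overall.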

I think eventually I’ll bring the OptiPlex firewall into the cluster as another node and begin hosting my networking VMs on it rather than running a bare-metal OPNsense installation. Alternatively, I’d like to finish the EPYC Supermicro server, but I still need to buy memory, the CPU, and some disks to get started. The new PowerEdges from work, by contrast, were ready to go and didn’t cost me a dime.

Finally, the last upgrade (and my favorite) is the new keystone panel. Previously, a bundle of premade CAT6 ran off the side of my switch and around the server. It was ugly and tangled, and the “service loop” always got in the way whenever I tried to rearrange things or work on the equipment. I got the keystone panel off Amazon along with a pack of CAT6 couplers (not punchdowns, I’m sorry /r/homelab). Now the cabling sits in a neatly wrapped, manageable bundle that goes directly into the keystones, with some nice 1ft patch cables.

If you’re interested in other parts of the homelab or my WIP Supermicro, you can check out more in my first post: https://ug24.co/2025/01/20/welcome/